GPU Farms: Powering AI Possibilities

Enterprise-grade GPU clusters for high-speed AI model training, inference, and real-time analytics.

Why Choose GPU Clusters?

⚡ Parallel processing slashes training time from weeks to hours

🌐 Scales seamlessly across edge, cloud, and hybrid deployments

🧪 Optimized for TensorFlow, PyTorch, and ONNX out of the box

🌿 Hosted in carbon-neutral data centers

🔒 InfiniBand and 100 Gbps Ethernet for ultra-low latency

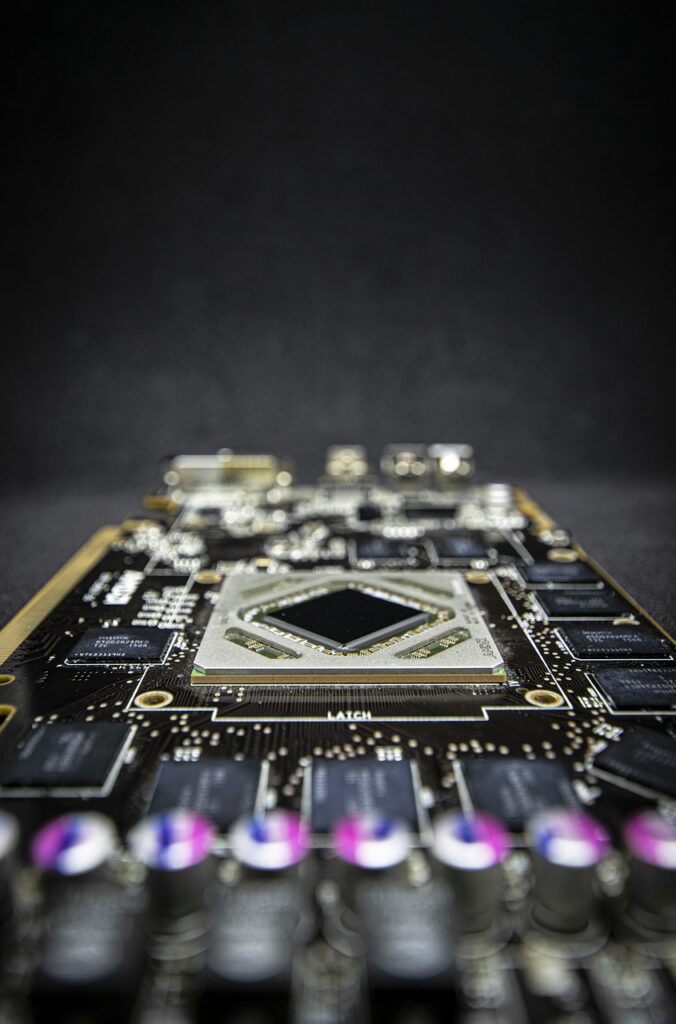

High-Performance GPU Infrastructure

Scalable, carbon-neutral, and optimized for AI workloads

10× Faster vs CPU

🔥 1. Raw Compute Power – Multi-GPU Clusters

At the heart of Shankar AI’s intelligence lies a roaring engine of performance: multi-GPU clusters engineered to deliver petaflops of training throughput. Designed for next-generation AI workloads, these compute nodes are built on NVIDIA H100 GPUs, whose tensor cores and parallel-processing pipelines are ready to take on billion-parameter models with ease.

Whether it’s generative models, reinforcement learning, or medical simulations, our compute farm accelerates breakthroughs with:

⚙️ Seamless workload orchestration

🧠 Ultra-deep neural network training

🔁 Continuous fine-tuning at scale

Raw speed meets raw intelligence. Welcome to a powerhouse built for tomorrow’s thinking.

⚡ 2. Ultra-Low Latency – Powered by InfiniBand and 100 Gbps Ethernet

Time is the true cost in AI, so we minimize lag with InfiniBand networking and 100 Gbps Ethernet backbones. Our architecture is designed for sub-millisecond latency, enabling real-time collaboration between AI modules, distributed training nodes, and live inference endpoints.

Key features:

🚀 Near-zero jitter across clusters

🔗 Seamless high-speed data replication

📡 Real-time response for edge and cloud fusion
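Why does interconnect bandwidth matter so much for distributed training nodes? The sketch below gives a rough, back-of-envelope answer under stated assumptions. It models gradient synchronization as a ring all-reduce (a common pattern in collective-communication libraries, used here as an illustrative assumption rather than a description of this cluster's software stack). It ignores per-hop latency and any overlap of compute with communication, and the model size, node count, and link speeds are hypothetical inputs, not measurements.

```python
# Back-of-envelope estimate of gradient synchronization time for
# data-parallel training using a ring all-reduce.
# Assumptions (hypothetical, not measured on any specific cluster):
#   - each of n_nodes holds a full copy of the gradients (size_bytes)
#   - every link sustains link_gbps of usable bandwidth
#   - per-hop latency and compute/communication overlap are ignored


def ring_allreduce_seconds(size_bytes: float, n_nodes: int, link_gbps: float) -> float:
    """Bandwidth term of a ring all-reduce: each node sends (and receives)
    2 * (n - 1) / n of the gradient buffer."""
    if n_nodes < 2:
        return 0.0  # nothing to synchronize on a single node
    bytes_per_second = link_gbps * 1e9 / 8  # convert Gb/s to bytes/s
    traffic = 2 * (n_nodes - 1) / n_nodes * size_bytes
    return traffic / bytes_per_second


if __name__ == "__main__":
    # Hypothetical example: 1 billion parameters with fp16 gradients (2 bytes each).
    grad_bytes = 1e9 * 2
    for gbps in (10, 100):
        t = ring_allreduce_seconds(grad_bytes, n_nodes=8, link_gbps=gbps)
        print(f"{gbps:>4} Gb/s link: {t:.2f} s per full gradient sync")
```

Under these assumptions, an 8-node sync of 2 GB of gradients drops from about 2.8 s on a 10 Gb/s link to about 0.28 s at 100 Gb/s. Because this cost can recur on every training step, the link speed directly caps how well training scales across nodes.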

Think faster. Train smarter. Deploy instantly.

🌿 3. Eco-Efficient Design – Carbon-Neutral, Optimized Data Centers

Where most see heat, we see harmony. Our AI infrastructure runs in energy-optimized data centers powered by integrated solar, wind, and hydro energy, and built to an eco-efficient blueprint that minimizes environmental impact.

Each node features:

🌞 Solar-powered GPU racks

♻️ Biodegradable modular hardware

🌱 Smart cooling & airflow zoning systems

Certified under global green computing standards and powered by 100% renewable energy, our eco-efficient design delivers sustainability without compromising performance.

AI with a conscience. Innovation with a footprint so small, it’s barely there.

Ready to Accelerate Your AI Journey?

Whether you’re building the next breakthrough model or modernizing legacy systems, SystemBaseLabs GPU farms are ready for your mission.

We respond within 24 hours. Ask us anything.